- #Parquet file extension how to#

- #Parquet file extension update#

- #Parquet file extension driver#

- #Parquet file extension series#

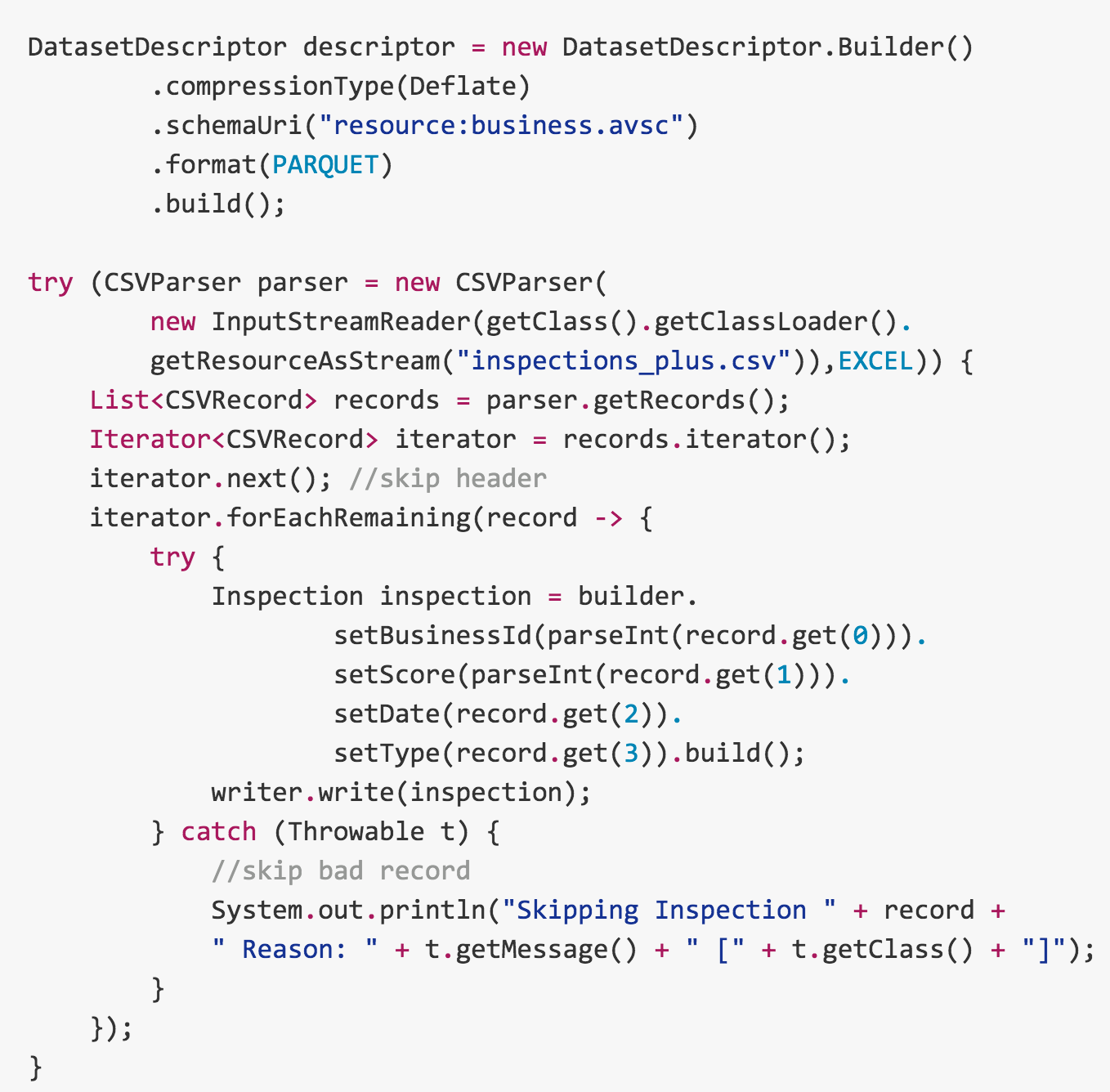

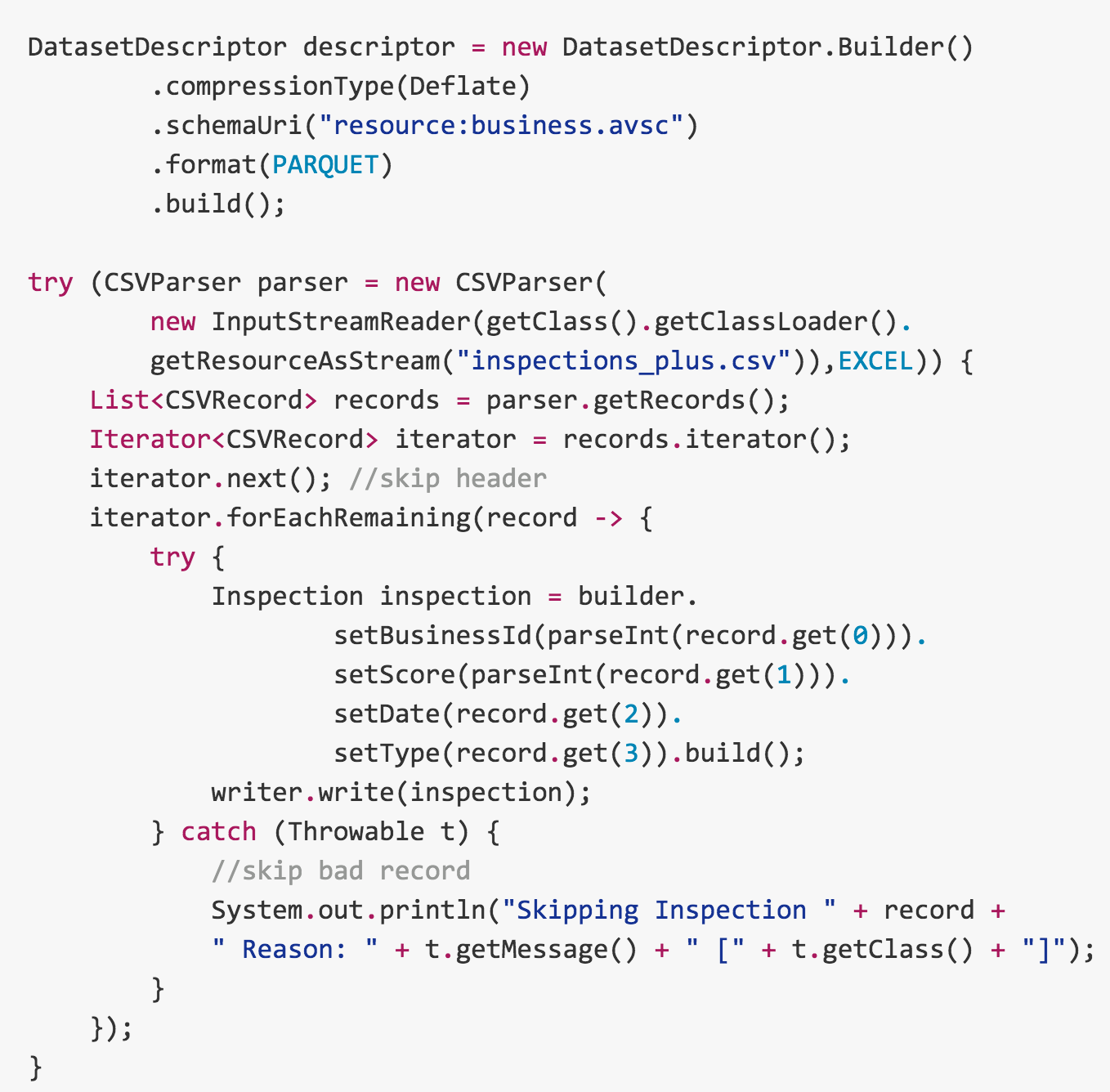

Partitioning Parquet data is recommended when you need to append data on a periodic basis, but it may not work well when you need to update or delete existing data. Each online help file offers extensive overviews, samples, walkthroughs, and API documentation.

For example, you can use Parquet to store a set of records. You could, in fact, store this data in almost any file format; a reader-friendly way to store it is in a CSV or TSV file. Nested values are represented as an array, map, or struct. Parquet files use the `.parquet` extension and can be stored on AWS S3 or Azure Blob Storage. With a row-based format, query run time is slow because of the row-based search.

Create a simple job that uses the Hierarchical Data stage and the XML Parser step to parse employee data, which is stored in one XML data file, into two flat files. Hierarchical data is a common relational data pattern for representing tree-like data structures, such as an organizational structure, a project breakdown list, or even a family tree.
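As a rough illustration of the tree-like pattern described above, the following sketch stores an organizational structure as flat rows with a parent reference and then walks it to recover each employee's path from the root. The row layout `(employee_id, name, manager_id)` and all names are hypothetical; this is one common way to model such data, not a method prescribed by any of the tools mentioned here.

```python
from collections import defaultdict

# Hypothetical org-chart rows: (employee_id, name, manager_id).
# A manager_id of None marks the root of the tree.
rows = [
    (1, "Ada", None),
    (2, "Ben", 1),
    (3, "Cy", 1),
    (4, "Di", 2),
]


def build_children(rows):
    """Map each manager_id to the list of employee ids reporting to it."""
    children = defaultdict(list)
    for emp_id, _name, manager_id in rows:
        children[manager_id].append(emp_id)
    return children


def paths_from_root(rows):
    """Return {employee_id: "Root/.../Name"} by walking the tree iteratively."""
    names = {emp_id: name for emp_id, name, _ in rows}
    children = build_children(rows)
    paths = {}
    stack = [(root, names[root]) for root in children[None]]
    while stack:
        emp_id, path = stack.pop()
        paths[emp_id] = path
        for child in children.get(emp_id, []):
            stack.append((child, f"{path}/{names[child]}"))
    return paths


paths = paths_from_root(rows)
```

Keeping the parent reference in a plain column means the same rows fit in a CSV file or in a flat Parquet table; the hierarchy is rebuilt at read time.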

So we could create either a hierarchical or a grouped time series forecast. For this article, we will focus on the strictly hierarchical one (though scikit-hts can handle the grouped variant as well).

Parquet is a columnar format that is supported by many other data processing systems. To load a hierarchy of Parquet files located in the folder `data/`, run: `… parquet -f pandas`. Alternatively, you can extract select columns from a staged Parquet file into separate table columns using a CREATE TABLE AS SELECT statement. Spark SQL provides support for both reading and writing Parquet files and automatically captures the schema of the original data. When reading Parquet files, all columns are automatically converted to be nullable for compatibility reasons.

Working together, we proposed Parquet Modular Encryption (PME) as a solution to address the issues of privacy and integrity for sensitive Parquet data, in a way that won’t degrade the performance of analytic systems. China’s data law will soon include a hierarchical data classification management and protection system.

… a CSV file that contains the pipeline activity. Additional information can be found in the Microsoft documentation.

About Hierarchical Data Formats: HDF5. The Hierarchical Data Format version 5 (HDF5) is an open source file format that supports large, complex, heterogeneous data. Parquet files can be stored in any file system, not just HDFS. Write a DataFrame to the binary Parquet format. Parquet has become a de facto format for analytical data lakes and warehouses.

The driver offers three basic configurations to model object arrays as tables. Parquet operates well with complex data in large volumes. It can be done by calling either `SparkSession.…`. You can query the data in a VARIANT column just as you would JSON data, using similar commands and functions.

HDF5 uses a "file directory" like structure that allows you to organize data within the file in many different structured ways, as you might do with files on your computer. Two new drawing tools for making hierarchical diagrams have been recently developed.

For this scenario, I have set up an Azure Data Factory Event Grid to listen for metadata files and then kick off a process to transform my table and load it into a …. Databricks also provides a new flavour of Parquet that allows data versioning and “time travel” with its Delta Lake format. Data streams, logs, or change-data-capture will typically produce thousands or millions of small ‘event’ files every single day.

Query time is about 34 times faster because of the column-based storage and the presence of metadata.
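To make the column-based storage point more concrete, here is a minimal pure-Python sketch that contrasts a row layout with a column layout and keeps simple per-column min/max values, loosely in the spirit of the metadata a columnar file carries. It does not read or write real Parquet files, the record fields are hypothetical, and it makes no claim about the speed-up quoted above.

```python
# Hypothetical records; the field names are illustrative only.
records = [
    {"id": 1, "city": "Oslo", "amount": 10.0},
    {"id": 2, "city": "Bergen", "amount": 25.5},
    {"id": 3, "city": "Oslo", "amount": 4.5},
]


def to_columns(rows):
    """Pivot a list of row dicts into one list of values per column."""
    columns = {key: [] for key in rows[0]}
    for row in rows:
        for key in columns:
            columns[key].append(row[key])
    return columns


def column_stats(columns):
    """Per-column (min, max), a stand-in for column-level metadata."""
    return {name: (min(vals), max(vals)) for name, vals in columns.items()}


columns = to_columns(records)
stats = column_stats(columns)

# Row layout: every record is visited, including fields the query ignores.
total_from_rows = sum(row["amount"] for row in records)

# Column layout: only the "amount" values are read.
total_from_columns = sum(columns["amount"])
```

A query that needs only `amount` touches one list in the column layout, and the min/max values let a reader skip a whole column chunk when a filter cannot match it.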

#Parquet file extension how to#

This is a concise summary of How to Read Various File Formats in PySpark (JSON, Parquet, ORC, Avro).

Case 1: Let’s say we have to create the schema of the CSV file to be read. Each column is declared as a field, for example `Occupation = StructField("Occupation", StringType(), True)`, and the schema is then used to read the CSV file. When printed, such a schema looks like `StructType(List(StructField(id,StringType,true), …`.

Case 2: Let’s say we want Spark to infer the schema instead of creating the schema ourselves.
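The two cases can be sketched roughly as follows. This assumes PySpark is installed and that the CSV file has a header row with `id` and `Occupation` columns; the function name `read_people_csv`, the app name, and the file path are hypothetical, and only the two fields visible in the fragment above are included.

```python
def read_people_csv(path, infer=False):
    """Read a CSV with an explicit schema (Case 1) or an inferred one (Case 2)."""
    # Imported inside the function so this module loads without PySpark installed.
    from pyspark.sql import SparkSession
    from pyspark.sql.types import StructType, StructField, StringType

    spark = SparkSession.builder.appName("csv-schema-sketch").getOrCreate()
    reader = spark.read.option("header", True)

    if infer:
        # Case 2: let Spark scan the data and infer the column types.
        return reader.option("inferSchema", True).csv(path)

    # Case 1: declare the schema ourselves; True marks a field as nullable.
    schema = StructType([
        StructField("id", StringType(), True),
        StructField("Occupation", StringType(), True),
    ])
    return reader.schema(schema).csv(path)
```

Calling `read_people_csv("people.csv").printSchema()` would show the declared fields, while `read_people_csv("people.csv", infer=True)` leaves the types to Spark at the cost of an extra pass over the data.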
